When the UX team moved out of the technology department to be housed centrally in administration, I knew we’d expand our scope to include projects related to physical space. I didn’t know that for two weeks at the end of the fall semester, I’d be immersed in a furniture study to quickly gather feedback from students on dozens of furniture options. It was a new thing for the UX team. It was interesting. And I thought I’d share what we did.

Context

We’re undergoing a massive, multimillion-dollar renovation to our Main and Science-Engineering Libraries. Part of the renovation includes new furniture for our ground floors. In early November 2018, we received dozens of chairs, a few tables, and a couple of pieces of lounge furniture to pilot for a few weeks. They were placed throughout the Main Library ground floor, with tags identifying them.

We were given a short timeline to gather as much feedback as we could from students to guide decisions. The UX team (three of us) worked with our new assessment librarian, Lara Miller, and staff from access services, John Miller-Wells and Michael Principe.

Methods

Michael and John created a survey with Qualtrics that people could take online or fill out in person. Their student workers, who are dedicated to data collection, gathered observational data (primarily counts of furniture usage) and transcribed the print survey results.

Lara and the UX team conducted more qualitative observations and did some informal interviews with people using the furniture.

We placed stock photos that the companies gave us (just a selection of some of the furniture) on boards in the space and asked people to mark their favorites with sticky notes. Realizing quickly that this did little to tell us why pieces were marked, we asked participants to also describe the furniture in a few words.

Getting close to the end of the pilot, we realized we didn’t have as much feedback as we wanted on particular choices, such as the laptop tables. We also wanted to compare some specific stools and specific chairs side-by-side.

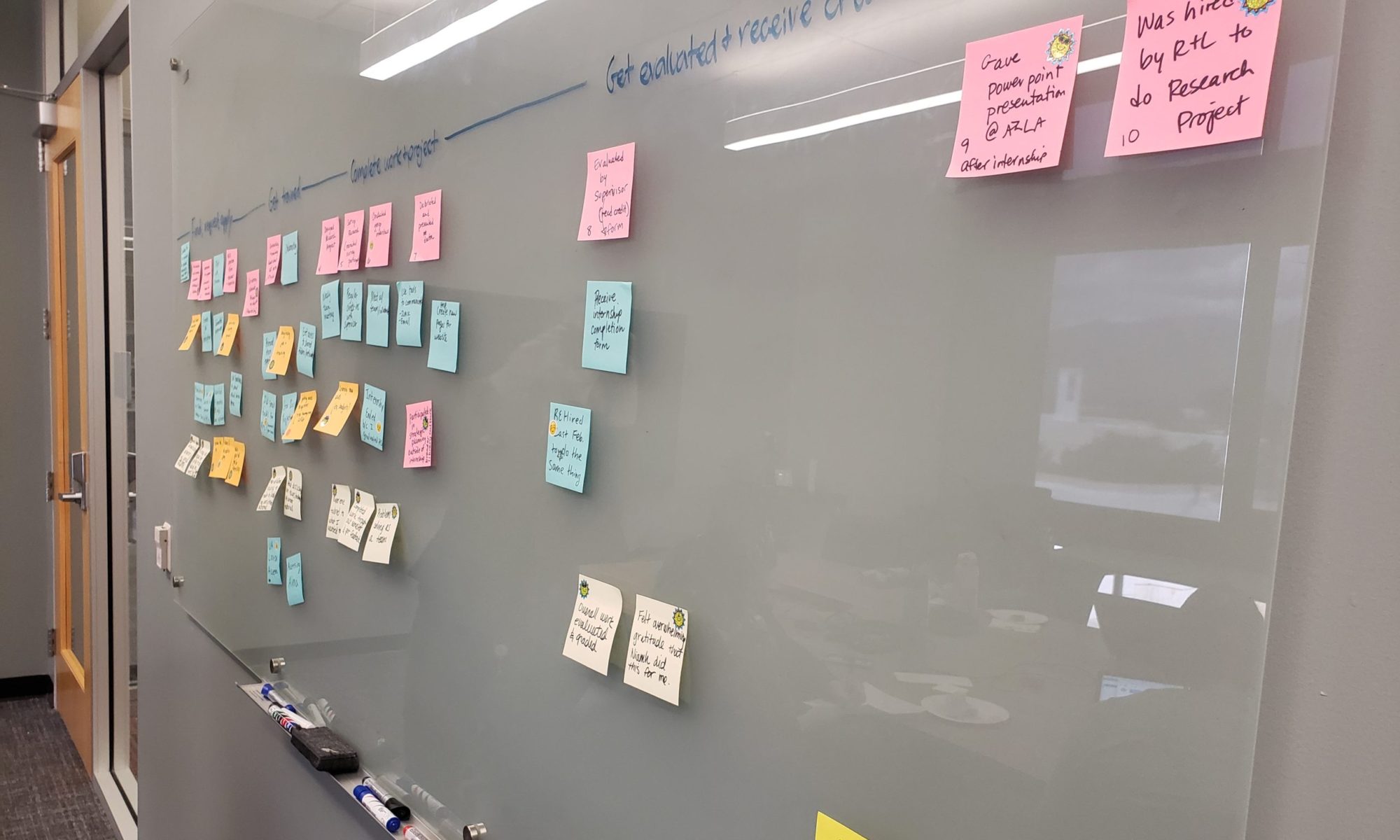

To get this feedback, we posted photos of the pilot furniture on a large whiteboard and then placed the actual furniture nearby. We asked participants to mark their favorites with green sticky dots and their least favorites with red sticky dots. (We have since realized this would be an issue for colorblind users, so in the future we might use something like stars and sad faces instead.)
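For anyone wanting to tally a dot vote like this afterward, here is a minimal sketch in Python. It is purely illustrative: the furniture labels and the list of dots are made up for the example, and the "net score" (green minus red) is just one simple way to rank pieces, not a description of how the study's counts were compiled.

```python
from collections import Counter


def tally_dots(dots):
    """Count green (favorite) and red (least favorite) dots per piece.

    `dots` is a list of (piece, color) pairs, where color is "green" or "red".
    Returns a list of (piece, greens, reds, net) tuples, sorted by net score
    (greens minus reds), highest first.
    """
    greens = Counter(piece for piece, color in dots if color == "green")
    reds = Counter(piece for piece, color in dots if color == "red")
    pieces = sorted(set(greens) | set(reds))
    rows = [(p, greens[p], reds[p], greens[p] - reds[p]) for p in pieces]
    return sorted(rows, key=lambda row: row[3], reverse=True)


# Hypothetical dots, invented for this example (not data from the study).
example_dots = [
    ("Stool A", "green"), ("Stool A", "green"), ("Stool A", "red"),
    ("Stool B", "red"), ("Stool B", "red"), ("Stool B", "green"),
    ("Laptop table C", "green"),
]

for piece, g, r, net in tally_dots(example_dots):
    print(f"{piece}: {g} green, {r} red, net {net:+d}")
```

Keeping the green and red counts alongside the net score is deliberate: a piece that splits opinion and a piece nobody noticed can end up with the same net, and the raw counts tell them apart.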

Limitations

We were short on time, it was near the end of the semester, and we had a lot of furniture to get feedback on. The stock photos also didn’t match the pilot furniture exactly.

It’s hard to get authentic feedback on this type of thing. Most of the data we collected was attitudinal rather than behavioral. And if we really want to make the best decisions for our students, we should know what they do, not just what they think. The best way to discover how students actually use the furniture and what they prefer might be an ethnographic study, but we didn’t have the time or resources for that.

A significant issue with most of our methods is that students could vote on a chair for aesthetic reasons (color, shape) when they haven’t actually used it in any real capacity. So a chair could score highly because it’s attractive but not particularly functional, especially for long periods of study.

The decision-making process at the end of the day was also unclear, as it’s a negotiation between the library project team, the architects, and the vendors. We can provide the data we collected, but then it’s essentially out of our hands.

Findings

We ended up with 283 completed surveys, 606 sticky notes on the stock photos, and 573 sticky dots on the whiteboards (we removed the stickies as we went so the boards wouldn’t get overwhelmed). We also had 13 days’ worth of usage data and a handful of notes from qualitative observations.

While we had a couple of hundred survey results, each survey referred to only a single piece of furniture, so it was hard to draw any conclusions (just 0-5 pieces of feedback per piece). We found that the comparative data was much more useful, and in retrospect we would have done more of this from the get-go.

We put together all the data and in December were able to present a selection of furniture we recommended and didn’t recommend for purchase.

In the chairs category, most of the chairs we recommended were adjustable, on wheels, with arms, and with fabric seats. For stools, the ability to adjust up and down for people of different heights was especially important. The chairs we didn’t recommend tended to have hard plastic seats or metal arms, be non-adjustable, or be less comfortable for longer-term use.

The winning laptop tables had larger surfaces (to fit a laptop and a mouse/notebook), felt sturdier, and had legs that could fit under a variety of chairs or tables.

Overall, we didn’t find anything groundbreaking in the data. But we do now have some solid recommendations to share with the powers that be. And we did learn a lot just through the process, which was in many ways an experiment for us:

- how to gather data on people’s attitudes around furniture

- how to act quickly and iterate on our process

- it’s possible to gather a bunch of data in a short, focused amount of time

- a mixed-methods approach works best for this type of thing (as it does for most things!)